[ad_1]

This 7 days is the Laptop or computer Eyesight and Pattern Recognition meeting in Las Vegas, and Google scientists have quite a few accomplishments to current. They’ve taught computer vision programs to detect the most significant person in a scene, to select out and observe individual overall body areas, and to explain what they see in language that leaves very little to the creativeness.

Initial, let’s consider the skill to discover “events and critical actors” in video clip — a collaboration involving Google and Stanford. Footage of scenes like basketball game titles includes dozens or even hundreds of persons, but only a number of are value paying out awareness to. The CV program explained in this paper uses a recurrent neural network to create an “attention mask” for just about every frame, then tracking relevance of every object as time proceeds.

More than time the program is ready to select out not only the most significant actor, but possible significant actors, and the situations with which they are related. Think of it like this: it could convey to that someone heading in for a lay-up could be significant, but that the most significant participant there is the one particular who furnishes the denial. The implications for intelligently sorting by means of crowded footage (feel airports, busy streets) are signifiacnt.

Subsequent is a additional whimsical paper: scientists have produced a CV program for getting the legs of tigers. Well… there’s a minor additional to it than that.

The tigers (and some horses) just served as “articulated object classes” — basically, objects with continually shifting areas — for the program to look at and have an understanding of. By identifying independently shifting areas and their motion and posture relative to the relaxation of the animal, the limbs can be recognized frame by frame. The progress here is that the method is capable of producing that identification throughout quite a few video clips, even when the animal is shifting in distinct strategies.

It’s not that we desperately want data on the entrance remaining legs of tigers, but once again, the skill to discover and observe individual areas of an arbitrary person, animal, or equipment (or tree, or garment, or…) is a potent one particular. Think about remaining ready to scrape video clip just for tagged animals, or persons with telephones in their palms, or bicycles with panniers. Naturally the surveillance factor will make for possible creepiness, but academically speaking the operate is fascinating. This paper was a collaboration involving the University of Edinburgh and Google.

Previous is a new skill for computer vision that may be a bit additional useful for day to day use. CV programs have very long been ready to classify objects they see: a person, a desk or surface area, a car or truck. But in describing them they may not always be as exact as we’d like. On a desk of wine eyeglasses, which one particular is yours? In a crowd of persons, which one particular is your close friend?

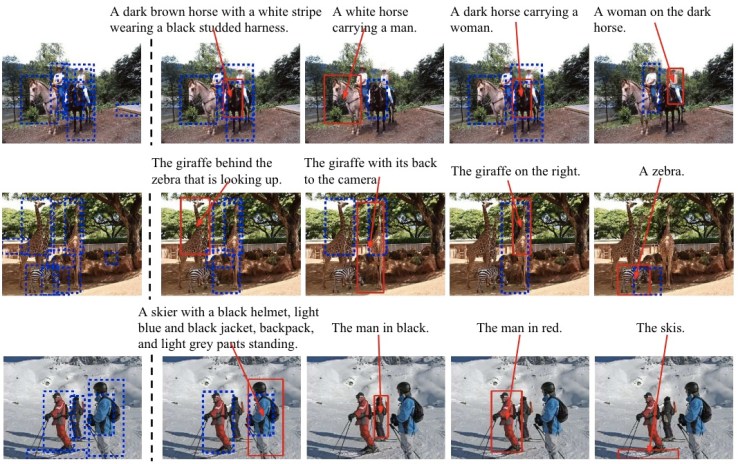

This paper, from scientists at Google, UCLA, Oxford, and Johns Hopkins, describes a new technique by which a computer can specify objects with question of confusion. It brings together some standard logic with the potent programs at the rear of picture captioning — the ones that make a little something like “a man in pink taking in ice cream is sitting down down” for a picture additional or a lot less assembly that description.

The computer appears to be like by means of the descriptors available for the objects in question and finds a blend of them that, with each other, can only utilize to one particular object. So among a team of laptops, it could say “the gray notebook that is turned on,” or if quite a few are on, it could add “the gray notebook that is turned on and exhibiting a woman in a blue dress” or the like.

It’s one particular of these points persons do continually devoid of pondering about it — of course, we can also level — but that is in actuality very difficult for computer systems. Staying ready to explain a little something to you precisely is valuable, of course, but it goes the other way: you may some day say to your robot butler “grab me the amber ale that’s at the rear of the tomatoes.”

Naturally, all three of these papers (and additional among the quite a few Google is presenting) use deep mastering and/or some sort of neural network — it’s almost a given in computer vision research these days, given that they have gotten so substantially additional potent, versatile, and easy to deploy. For the particulars of every network, nevertheless, talk to the paper in question.

Highlighted Image: Omelchenko/Shutterstock

Go through More Right here

[ad_2]

Google scientists teach AIs to see the significant areas of photographs – and convey to you about them

-------- First 1000 businesses who contacts http://honestechs.com will receive a business mobile app and the development fee will be waived. Contact us today.

#electronics #technology #tech #electronic #device #gadget #gadgets #instatech #instagood #geek #techie #nerd #techy #photooftheday #computers #laptops #hack #screen

No comments:

Post a Comment