
By now, everybody has heard of Google’s RankBrain, the new artificial intelligence machine learning algorithm that is supposed to be the latest and greatest from Mountain View, Calif. What many of you might not realize, however, is just how quickly the SEO industry is changing because of it. In this article, I’ll take you through some clear examples of how some of the old rules of SEO no longer apply, and what steps you can take to stay ahead of the curve so you can continue to deliver successful SEO campaigns for your businesses.

So what is artificial intelligence?

There are generally three different classifications of artificial intelligence:

- Artificial Narrow Intelligence (ANI): This is AI for one particular task (e.g., beating the world champion in chess).

- Artificial General Intelligence (AGI): This is when the AI can do everything. Once an AI can perform like a human, we consider it AGI.

- Artificial Superintelligence (ASI): AI on a much higher level for everything (e.g., beyond the capabilities of a single human).

When we talk about Google’s RankBrain and the machine learning algorithms that are now running on Google, we are talking about Artificial Narrow Intelligence (ANI).

Actually, ANI has been around for some time. Ever wonder how those spam filters in your email work? Yep, that’s ANI. Here are some of my favorite ANI programs: Google Translate, IBM’s Watson, that cool feature on Amazon that shows you products “recommended for you,” self-driving cars and, yes, our beloved Google RankBrain.

Within ANI, there are many different approaches. As Pedro Domingos clearly lays out in his book The Master Algorithm, data scientists trying to achieve the perfect AI can be grouped into five “tribes” today:

- Symbolists

- Connectionists

- Evolutionaries

- Bayesians

- Analogizers

Google’s RankBrain is in the camp of the Connectionists. Connectionists believe that all our knowledge is encoded in the connections between neurons in our brain. And RankBrain’s particular strategy is what experts in the field call a backpropagation technique, rebranded as “deep learning.”
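
To make “backpropagation” less abstract, here is a minimal sketch of the technique itself on a toy network with two inputs, two hidden units and one output, in plain Python. It is a generic textbook illustration, not RankBrain’s architecture, which Google has not published; the network size, sigmoid activations, squared-error loss and parameter names (`w1`, `b1`, `w2`, `b2`) are all assumptions chosen for brevity.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(params: dict, x: list) -> tuple:
    """Forward pass: inputs -> sigmoid hidden layer -> single sigmoid output."""
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        for weights, bias in zip(params["w1"], params["b1"])
    ]
    output = sigmoid(sum(w * h for w, h in zip(params["w2"], hidden)) + params["b2"])
    return hidden, output


def loss(params: dict, x: list, target: float) -> float:
    """Squared error for one training example."""
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params: dict, x: list, target: float) -> dict:
    """Gradients of the loss for every weight and bias, via the chain rule."""
    hidden, y = forward(params, x)
    # Error signal at the output unit: dL/dy * dy/dz for a sigmoid output.
    delta_out = (y - target) * y * (1.0 - y)
    # Send that error back along each hidden-to-output connection.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(params["w2"], hidden)]
    return {
        "w1": [[d * xi for xi in x] for d in delta_hidden],
        "b1": delta_hidden,
        "w2": [delta_out * h for h in hidden],
        "b2": delta_out,
    }


def train_step(params: dict, x: list, target: float, lr: float = 0.5) -> dict:
    """One gradient-descent update; returns new parameters without mutating the old ones."""
    g = backprop(params, x, target)
    return {
        "w1": [[w - lr * gw for w, gw in zip(row, grow)] for row, grow in zip(params["w1"], g["w1"])],
        "b1": [b - lr * gb for b, gb in zip(params["b1"], g["b1"])],
        "w2": [w - lr * gw for w, gw in zip(params["w2"], g["w2"])],
        "b2": params["b2"] - lr * g["b2"],
    }


params = {"w1": [[0.1, -0.2], [0.4, 0.3]], "b1": [0.0, 0.1], "w2": [0.5, -0.6], "b2": 0.05}
for step in range(3):
    print(step, round(loss(params, [1.0, 0.5], 1.0), 4))
    params = train_step(params, [1.0, 0.5], 1.0)
```

Backpropagation is the chain rule applied layer by layer: the output error is pushed back through each connection to tell every weight which way to move. Comparing `backprop` against a finite-difference estimate of `loss` is a quick way to check the gradients.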

Connectionists claim this technique is capable of learning anything from raw data, and is therefore also capable of ultimately automating all knowledge discovery. Google apparently believes this, too. On January 26th, 2014, Google announced it had agreed to acquire DeepMind Technologies, which was, essentially, a backpropagation shop.

So when we talk about RankBrain, we can now tell people it consists of one particular technique (backpropagation, or “deep learning”) within ANI. Now that we have that out of the way, how quickly is this field progressing? And, more importantly, how is it changing the business of SEO?

The exponential growth of technology (and AI)

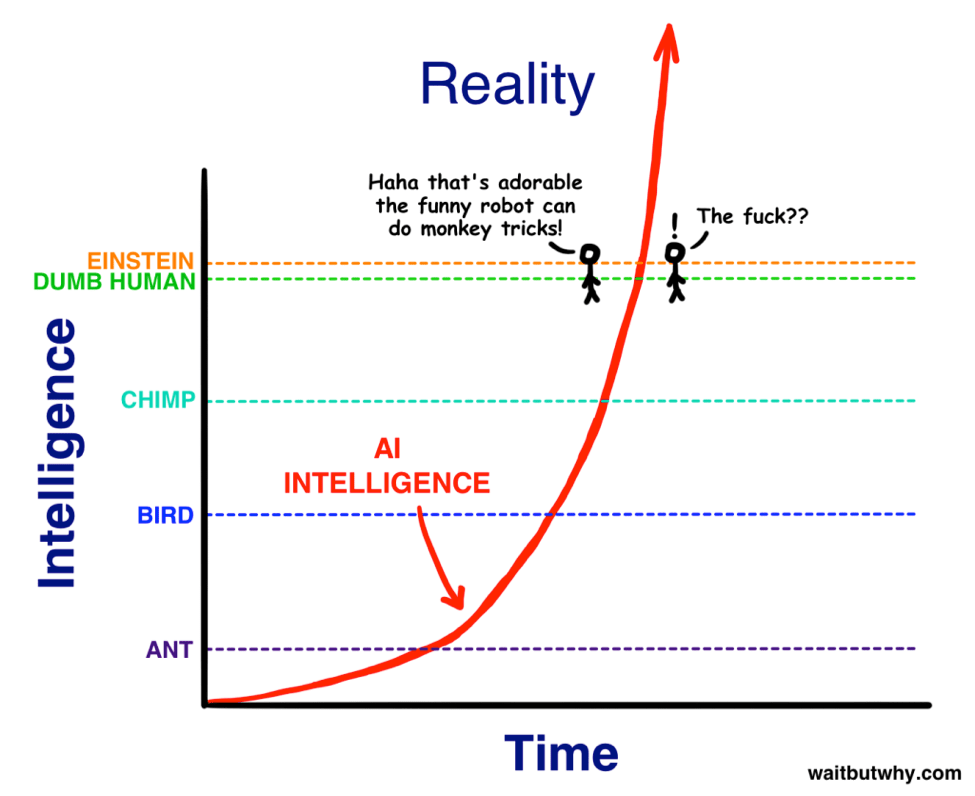

Tim Urban from WaitButWhy.com explains the growth of technology better than anyone in his article The AI Revolution: The Road to Superintelligence.

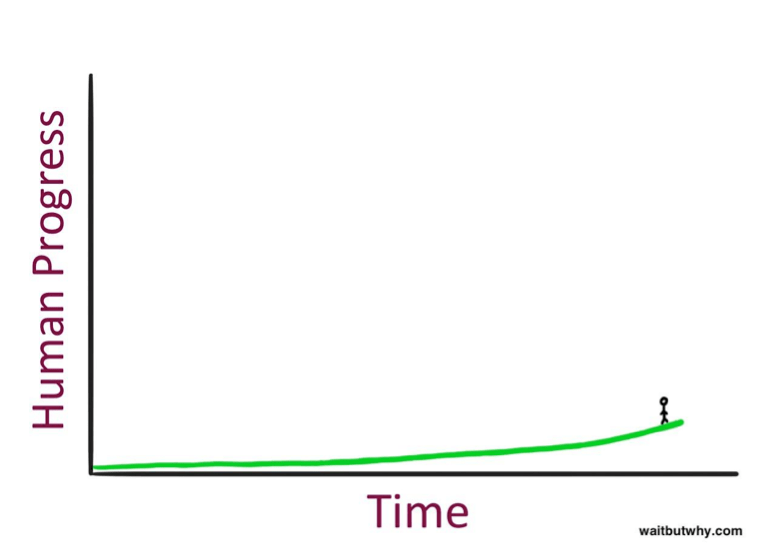

Here is what technological progress looks like when you look back at history:

But, as Urban points out, in reality you can’t see what’s to your right (the future). So here is how it actually feels when you are standing there:

What this chart shows is that when humans try to predict the future, they always underestimate. This is because they are looking to the left of this graph, instead of to the right.

However, the reality is that human progress happens at a faster and faster rate as time goes on. Ray Kurzweil calls this the Law of Accelerating Returns. The scientific reasoning behind his original theory is that more advanced societies have the ability to progress at a faster rate than less advanced societies, because they’re more advanced. Of course, the same applies to artificial intelligence and the growth rate we are seeing now with advanced technology.
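
The gap between intuition and accelerating returns is easy to put into numbers. This sketch assumes, purely for illustration, that progress doubles every two years (a hypothetical rate, not a figure from Kurzweil or Urban) and compares that curve with a forecaster who extends the most recent year’s rate of change in a straight line.

```python
def exponential_progress(level_now: float, doubling_years: float, years: float) -> float:
    """Level of progress `years` from now if it doubles every `doubling_years` (negative = past)."""
    return level_now * 2 ** (years / doubling_years)


def linear_guess(level_now: float, doubling_years: float, years: float, lookback: float = 1.0) -> float:
    """Forecast made by extending the most recent rate of change in a straight line."""
    past_level = exponential_progress(level_now, doubling_years, -lookback)
    recent_rate = (level_now - past_level) / lookback
    return level_now + recent_rate * years


if __name__ == "__main__":
    for horizon in (2, 5, 10, 20):
        actual = exponential_progress(1.0, 2.0, horizon)
        guess = linear_guess(1.0, 2.0, horizon)
        print(f"{horizon:>2} years: straight-line guess {guess:6.1f}x, accelerating {actual:8.1f}x")
```

With a two-year doubling period and a 10-year horizon, the straight-line guess comes out at about 3.9 times today’s level while the doubling curve reaches 32 times, which is the kind of underestimation described above.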

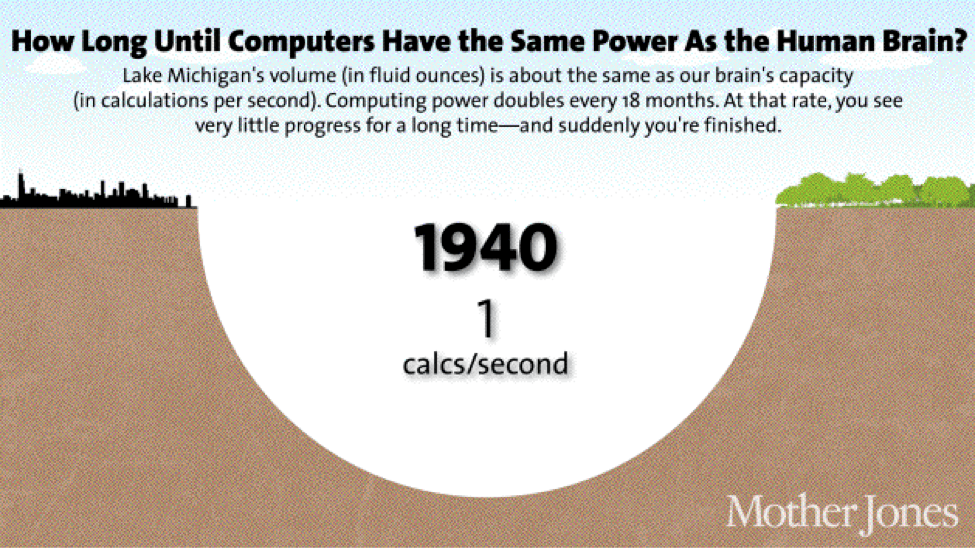

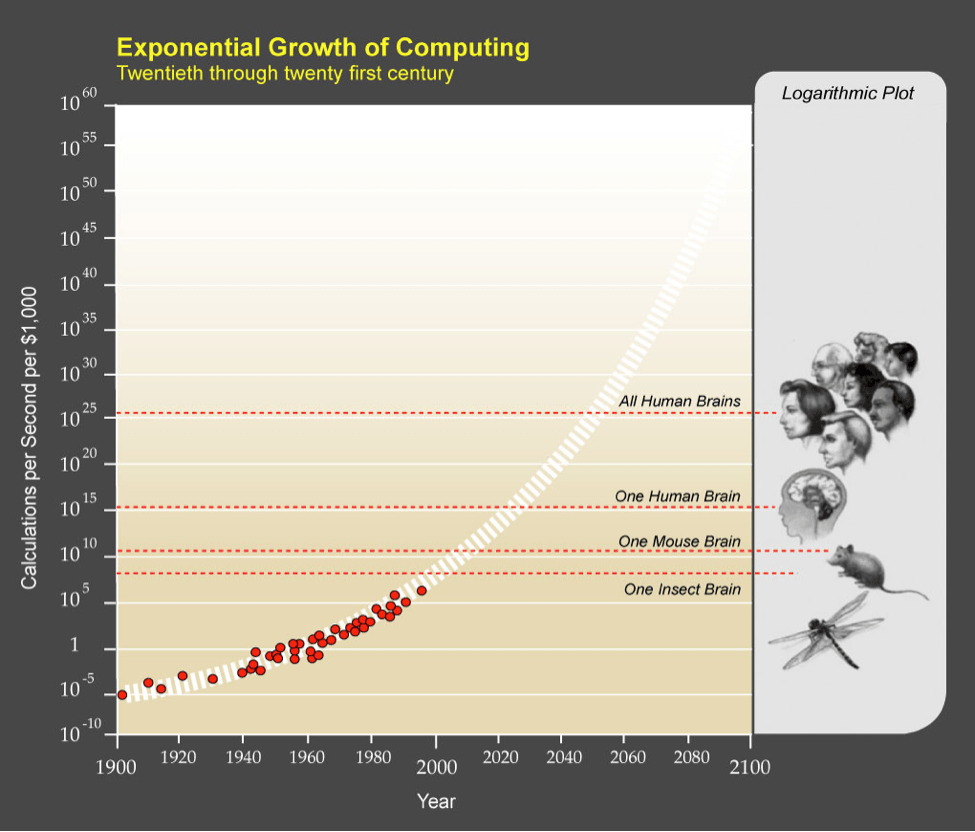

We see this with computing resources right now. Here is a visualization that gives you a sense of just how quickly things can change because of this Law of Accelerating Returns:

As you can clearly see, and as we can all intuitively feel, the growth of advanced processing and computers has benefited from this Law of Accelerating Returns. Here is another startling revelation: At some point, the processing power of an affordable computer will surpass that of not only a single human, but of all humans combined.

In fact, it now appears that we will be able to achieve Artificial General Intelligence (AGI) sometime around 2025. Technology is clearly advancing at a faster and faster pace, and, by many accounts, most of us will be caught off guard.

The rise of superintelligence

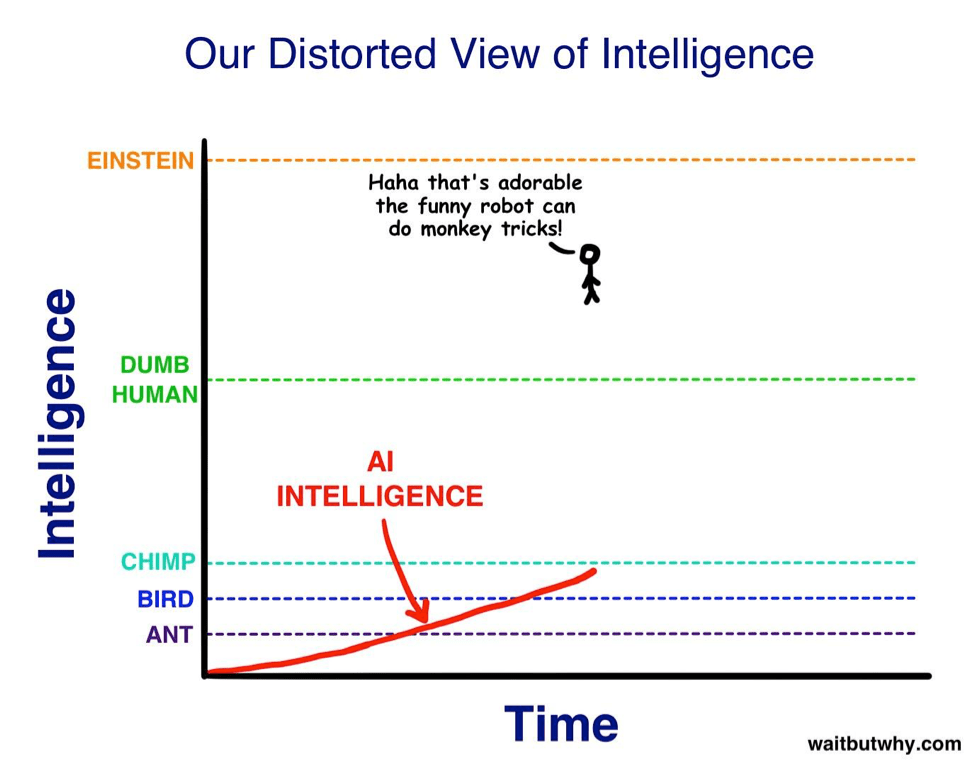

As I mentioned above, Google’s RankBrain is just one form of ANI, which means that, while it can perform better than a human in one specific domain, it is just that: a relatively weak form of artificial intelligence.

But we may be blindsided by how quickly this “weak” intelligence could turn into something we have no idea how to deal with.

Here, you can clearly see that Google’s RankBrain, while super smart at one particular task, is still, in the general scheme of things, relatively unintelligent on the intelligence scale.

But what happens when we apply the same Law of Accelerating Returns to artificial intelligence? Tim Urban walks us through the thought experiment:

“…so as A.I. zooms upward in intelligence toward us, we’ll see it as simply becoming smarter, for an animal. Then, when it hits the lowest capacity of humanity (Nick Bostrom uses the term ‘the village idiot’), we’ll be like, ‘Oh wow, it’s like a dumb human. Cute!’ The only thing is, in the grand spectrum of intelligence, all humans, from the village idiot to Einstein, are within a very small range, so just after hitting village idiot level and being declared to be AGI, it’ll suddenly be smarter than Einstein and we won’t know what hit us.”

So what does this mean for the business of SEO and the artificial intelligence that is upon us?

SEO has changed forever

Before we get into predicting the future, let’s take stock of how RankBrain has already changed SEO. I sat down with Carnegie Mellon alumnus and friend Scott Stouffer, now CTO and co-founder of Market Brew, a company that provides search engine models for Fortune 500 SEO teams. As a search engineer himself, Stouffer has had a unique perspective over the past decade that most professionals in the industry don’t get to see. Here are some of his tips for the SEO industry when it comes to Google’s new emphasis on artificial intelligence.

Today’s regression analysis is seriously flawed

This is the biggest current fallacy in our industry. There are plenty of prognosticators every time Google’s rankings shift in a big way. Usually, without fail, a few data scientists and CTOs from well-known companies in our industry will claim they “have a reason!” for the latest Google Dance. The typical analysis consists of combing through months of ranking data leading up to the event, then seeing how the rankings shifted across all websites of different types.

With today’s approach to regression analysis, these data scientists point to a specific type of website that has been affected (positively or negatively) and conclude with high certainty that Google’s latest algorithmic shift was attributable to a specific type of algorithm (content or backlink, et al.) that these websites shared.

However, that is not how Google works anymore. Google’s RankBrain, a machine learning or deep learning approach, works very differently.

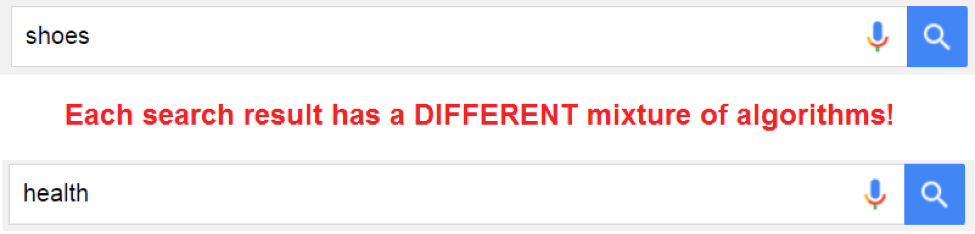

Within Google, there are a number of core algorithms. It is RankBrain’s job to learn what combination of these core algorithms is best applied to each type of search result. For instance, in certain search results, RankBrain might learn that the most important signal is the META Title.

Adding more significance to the META Title matching algorithm might lead to a better searcher experience. But in another search result, this very same signal might correlate terribly with a good searcher experience. So in that other vertical, another algorithm, maybe PageRank, might be promoted more.

This means that each search result has a completely different mix of algorithms. You can now see why running regression analysis over every site, without the context of the search result it appears in, is supremely flawed.
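
A toy model makes the per-query point concrete. In this sketch, each search result gets its own weighting of the same core signals, and the same three pages swap places depending on which weighting is active. The signal names (`title_match`, `pagerank`), the page scores and the weights are hypothetical values invented for illustration; Google does not publish its real signals or weights.

```python
from typing import Dict, List

Signals = Dict[str, float]

# Hypothetical signal scores for three pages competing on the same keywords.
PAGES: Dict[str, Signals] = {
    "page-a": {"title_match": 0.9, "pagerank": 0.2},
    "page-b": {"title_match": 0.4, "pagerank": 0.8},
    "page-c": {"title_match": 0.6, "pagerank": 0.5},
}

# Hypothetical weightings "learned" separately for two different search results.
WEIGHTS_BY_QUERY: Dict[str, Signals] = {
    "query-1": {"title_match": 0.8, "pagerank": 0.2},  # title matching matters most
    "query-2": {"title_match": 0.1, "pagerank": 0.9},  # link authority matters most
}


def score(signals: Signals, weights: Signals) -> float:
    """Weighted sum of a page's signals under one query's weighting."""
    return sum(weights.get(name, 0.0) * value for name, value in signals.items())


def rank(pages: Dict[str, Signals], weights: Signals) -> List[str]:
    """Order pages from best to worst score for one search result."""
    return sorted(pages, key=lambda page: score(pages[page], weights), reverse=True)


for query, weights in WEIGHTS_BY_QUERY.items():
    print(query, rank(PAGES, weights))
# prints:
# query-1 ['page-a', 'page-c', 'page-b']
# query-2 ['page-b', 'page-c', 'page-a']
```

Under `query-1` the title-heavy page wins; under `query-2` the same page drops to last. A regression pooled across both queries would see one signal helping in one place and hurting in another, and could easily conclude it does nothing at all.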

For these reasons, today’s regression analysis must be done for each specific search result. Stouffer recently wrote about a search modeling approach in which Google’s algorithmic shifts can be measured. First, you take a snapshot of what the search engine model was calibrated to in the past for a specific keyword search. Then, you re-calibrate it after a shift in rankings has been detected, revealing the delta between the two search engine model settings. Using this approach, during certain ranking shifts, you can see which particular algorithm is being promoted or demoted in its weighting.
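
The before-and-after calibration described above can be sketched with a deliberately tiny search engine model: two hypothetical signals mixed by a single weight, fitted per keyword by grid search so that the model’s ordering agrees with the observed ranking for as many page pairs as possible. This is an assumed, simplified stand-in, not Market Brew’s actual method; the page data and the two SERP orderings are invented.

```python
from typing import Dict, List

Signals = Dict[str, float]

# Hypothetical signal scores for the pages ranking on one keyword.
PAGES: Dict[str, Signals] = {
    "page-a": {"title_match": 0.9, "pagerank": 0.2},
    "page-b": {"title_match": 0.4, "pagerank": 0.8},
    "page-c": {"title_match": 0.6, "pagerank": 0.5},
}


def score(signals: Signals, w_title: float) -> float:
    """Two-signal model: w_title on title_match, the remainder on pagerank."""
    return w_title * signals["title_match"] + (1.0 - w_title) * signals["pagerank"]


def concordant_pairs(pages: Dict[str, Signals], observed: List[str], w_title: float) -> int:
    """Count page pairs the model orders the same way as the observed SERP (ties don't count)."""
    count = 0
    for i, higher in enumerate(observed):
        for lower in observed[i + 1:]:
            if score(pages[higher], w_title) > score(pages[lower], w_title):
                count += 1
    return count


def calibrate(pages: Dict[str, Signals], observed: List[str], steps: int = 10) -> Signals:
    """Grid-search the weight that best reproduces one keyword's observed ranking."""
    grid = [i / steps for i in range(steps + 1)]
    fits = [(concordant_pairs(pages, observed, w), w) for w in grid]
    best_fit = max(fit for fit, _ in fits)
    # Average every equally good weight so ties don't favor one edge of the grid.
    best = [w for fit, w in fits if fit == best_fit]
    w_title = sum(best) / len(best)
    return {"title_match": w_title, "pagerank": 1.0 - w_title}


def weight_delta(before: Signals, after: Signals) -> Signals:
    """Positive = signal promoted after the shift, negative = demoted."""
    return {name: after[name] - before[name] for name in before}


snapshot = calibrate(PAGES, ["page-a", "page-c", "page-b"])      # SERP before the shift
recalibrated = calibrate(PAGES, ["page-b", "page-c", "page-a"])  # SERP after the shift
print(weight_delta(snapshot, recalibrated))
```

With this sample data, the fitted `title_match` weight falls and `pagerank` rises, which is the per-keyword “promoted or demoted” reading the approach aims for. A real model would fit many more signals, and the fitted weights describe only that one keyword’s results.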

With this knowledge, we can then focus on improving that particular part of SEO for sites in those unique search results. But that same approach will not (and cannot) hold for other search results. This is because RankBrain operates at the search result (or keyword) level. It is literally customizing the algorithms for each search result.

Stay niche to avoid misclassification

What Google also realized is that they could teach their new deep learning system, RankBrain, what “good” sites look like and what “bad” sites look like. Similar to how they weight algorithms differently for each search result, they also realized that each vertical had different examples of “good” and “bad” sites. This is undoubtedly because different verticals have different CRMs, different templates and different structures of data altogether.

When RankBrain operates, it is essentially learning what the correct “settings” are for each environment. As you might have guessed by now, these settings depend entirely on the vertical in which it is operating. So, for instance, in the health industry, Google knows that a site like WebMD.com is a reputable site that they would like to have near the top of their searchable index. Anything that looks like the structure of WebMD’s site will be associated with the “good” camp. Similarly, any site that looks like the structure of a known spammy site in the health vertical will be associated with the “bad” camp.
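
The idea of per-vertical “good” and “bad” camps can be illustrated with a nearest-centroid classifier. That is a much simpler technique than deep learning, swapped in here only to show the shape of the idea: average the structural features of known good and known bad sites in one vertical, then label a new site by whichever average it sits closer to. The three features and all the numbers are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def centroid(vectors: List[Vector]) -> List[float]:
    """Average feature vector of a group of example sites."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def train_vertical(good: List[Vector], bad: List[Vector]) -> Dict[str, List[float]]:
    """The "settings" for one vertical: one centroid per camp."""
    return {"good": centroid(good), "bad": centroid(bad)}


def judge(model: Dict[str, List[float]], site: Vector) -> str:
    """Assign the site to the camp whose centroid is nearest (Euclidean distance)."""
    return min(model, key=lambda camp: math.dist(model[camp], site))


# Hypothetical features: [ad_density, outbound_link_ratio, article_page_ratio]
health = train_vertical(
    good=[[0.10, 0.20, 0.90], [0.15, 0.30, 0.80]],  # e.g., WebMD-like structures
    bad=[[0.70, 0.90, 0.20], [0.80, 0.80, 0.10]],   # e.g., known spammy health sites
)
print(judge(health, [0.20, 0.30, 0.85]))  # prints "good"
```

Because each vertical gets its own `train_vertical` call, the same site structure could be judged differently in another vertical whose exemplars look nothing like WebMD.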

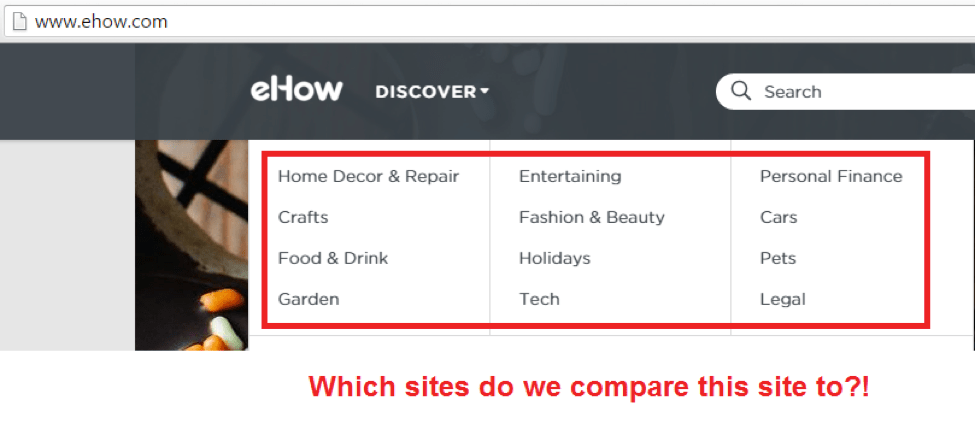

As RankBrain works to group “good” and “bad” sites together using its deep learning capabilities, what happens if you have a site that has many different industries all rolled up into one?

First, we have to discuss in a bit more detail how exactly this deep learning works. Before grouping sites into a “good” or “bad” bucket, RankBrain must first determine each site’s classification. Sites like Nike.com and WebMD.com are pretty easy. While there are many different sub-categories on each site, the general category is very straightforward. These types of sites are easily classifiable.

But what about sites that have many different categories? A good example is How-To sites, which typically cover many broad categories of information. In these cases, the deep learning process breaks down. Which training data does Google use on these sites? The answer is: It can be seemingly random. It may pick one category or another. For well-known sites, like Wikipedia, Google can opt out of this classification process altogether, to ensure that the deep learning process does not undercut its existing search experience (aka “too big to fail”).

But for lesser-known entities, what will happen? The answer is, “Who knows?” Presumably, this machine learning process has an automated way of classifying each site before attempting to compare it to other sites. Let’s say a How-To site looks just like WebMD’s site. Great, right?

Well, if the classification process thinks this site is about shoes, then it is going to compare the site to Nike’s site structure, not WebMD’s. It might just turn out that the site’s structure looks a lot like a spammy shoe site, as opposed to a reputable WebMD-like site, in which case the overly generalized site could easily be flagged as spam. If the How-To site had separate domains, it would be easy to make each genre look like the best of that industry. Stay niche.
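
The two-step process described above (classify first, then compare against that category’s exemplars) can be sketched as follows. Everything here is hypothetical: the topic vocabularies, the structural profiles and the simple term-overlap classifier are illustrative assumptions, not how Google actually classifies sites. The point is only that the second step never sees the right exemplars if the first step guesses wrong.

```python
import math
from typing import Dict, List, Set, Tuple

# Hypothetical topic vocabularies used to assign a site to a vertical.
VERTICAL_TERMS: Dict[str, Set[str]] = {
    "shoes": {"sneakers", "running", "sizing", "laces"},
    "health": {"symptoms", "treatment", "dosage", "diagnosis"},
}

# Hypothetical structural centroids per vertical:
# [ad_density, outbound_link_ratio, article_page_ratio]
PROFILES: Dict[str, Dict[str, List[float]]] = {
    "shoes": {"good": [0.05, 0.10, 0.10], "bad": [0.40, 0.50, 0.80]},
    "health": {"good": [0.10, 0.20, 0.90], "bad": [0.70, 0.90, 0.20]},
}


def classify(site_terms: Set[str]) -> str:
    """Step 1: pick the vertical whose vocabulary overlaps the site's topics the most."""
    # On a tie, max() keeps whichever vertical comes first, an arbitrary choice.
    return max(VERTICAL_TERMS, key=lambda v: len(VERTICAL_TERMS[v] & site_terms))


def evaluate(site_terms: Set[str], structure: List[float]) -> Tuple[str, str]:
    """Step 2: compare the site only with the good/bad profiles of its assigned vertical."""
    vertical = classify(site_terms)
    camps = PROFILES[vertical]
    verdict = min(camps, key=lambda camp: math.dist(camps[camp], structure))
    return vertical, verdict


how_to_structure = [0.15, 0.25, 0.85]  # article-heavy, WebMD-like layout
mixed_terms = {"sneakers", "running", "sizing", "symptoms", "treatment", "gardening"}
health_only_terms = {"symptoms", "treatment", "dosage"}

print(evaluate(mixed_terms, how_to_structure))        # prints ('shoes', 'bad')
print(evaluate(health_only_terms, how_to_structure))  # prints ('health', 'good')
```

The mixed How-To site carries more shoe vocabulary than health vocabulary, so it is judged against shoe sites, where its article-heavy structure resembles the hypothetical spam profile. Split off as a health-only site, the same structure lands in the “good” camp.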

These backlinks smell fishy

Let’s take a look at how this affects backlinks. Based on the classification process above, it is more important than ever to stick within your “linking neighborhood,” as RankBrain will know if something differs from similar backlink profiles in your vertical.

Let’s take the same example as above. Say a company has a site about shoes. We know that RankBrain’s deep learning process will attempt to compare every aspect of this site with the best and worst sites in the shoe industry. So, naturally, the backlink profile of this site will be compared to the backlink profiles of those best and worst sites.

Let’s also say that a typical reputable shoe site has backlinks from the following neighborhoods:

Now let’s say that the company’s SEO team decides to start pursuing backlinks from all these neighborhoods, plus a new one: the auto industry, through one of the CEO’s past connections. They are “smart” about it, too: They create a cross-marketing “free shoe offer for all new leases” page on the auto site, which then links to their new type of shoe. Totally relevant, right?

Well, RankBrain is going to see this and notice that this backlink profile looks a lot different from that of the typical reputable shoe site. Worse yet, it finds that a bunch of spammy shoe sites also have backlinks from auto sites. Uh oh.

And just like that, without even knowing what the “correct” backlink profile is, RankBrain has sniffed out what is “good” and what is “bad” for its search engine results. The new shoe site is flagged, and its organic traffic takes a nosedive.
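
The backlink scenario can be approximated by treating each link profile as a vector of referring-domain counts per linking neighborhood and comparing profiles with cosine similarity. The neighborhood names and counts below are hypothetical, and cosine similarity is an assumed stand-in for whatever comparison RankBrain actually performs.

```python
import math
from typing import Dict

Profile = Dict[str, float]  # linking neighborhood -> referring domains


def cosine(a: Profile, b: Profile) -> float:
    """Cosine similarity between two sparse link profiles (0.0 if either is empty)."""
    dot = sum(a[k] * b[k] for k in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def closest_camp(site: Profile, camps: Dict[str, Profile]) -> str:
    """Label a site by the reference profile it most resembles."""
    return max(camps, key=lambda name: cosine(site, camps[name]))


# Hypothetical reference profiles for the shoe vertical.
CAMPS: Dict[str, Profile] = {
    "reputable": {"fashion_blogs": 40, "running_clubs": 35, "sports_news": 25},
    "spammy": {"fashion_blogs": 20, "auto": 50, "coupon_sites": 30},
}

before = {"fashion_blogs": 40, "running_clubs": 35, "sports_news": 25}
after = {**before, "auto": 80}  # the new cross-marketing links from auto sites

print(closest_camp(before, CAMPS), closest_camp(after, CAMPS))  # prints "reputable spammy"
```

None of the new links is spammy on its own; the profile simply drifts toward the shape shared by the spammy reference sites, which is enough to flip the label in this toy comparison.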

The future of SEO and artificial intelligence

As we saw in the earlier discussion of the Law of Accelerating Returns, RankBrain and other forms of artificial intelligence will at some point surpass the human brain. And at that point, nobody knows where this technology will lead us.

Some things are certain, though:

- Every competitive keyword environment will need to be studied on its own;

- Most sites will need to stay niche to avoid misclassification; and

- Every site should mimic the structure and composition of the top sites in its niche.

In some ways, the deep learning methodology makes things easier for SEOs. Since RankBrain and similar technologies are almost on par with a human, the rule of law is clear: There are no more loopholes.

In other ways, things are a bit more difficult. The field of SEO will continue to become very complex. Analytics and big data are the order of the day, and any SEO who is not familiar with these techniques has a lot of catching up to do. Those of you who have these skills can look forward to a big payday.

Featured Image: Maya2008/Shutterstock

Artificial intelligence is changing SEO faster than you think